While developing my puzzle game, I came up with a way to use a single Photoshop document to create larger sprites for iPads and Macs and smaller versions for iPhones.

Turns out that this might have had a case of Premature Optimisation (ladies…).

Contrary to every tutorial on the internet involving SpriteKit (not to mention every answer on Stack Overflow), I had somehow got it into my head that using iPad-sized assets and scaling them down by 50+% to fit them on a phone was terribly wasteful and would cause phones to crash and players to be sad.

I mean, it is terribly wasteful. So that bit was right.

The crashing and sad players part, not so much.

What I Thought Was the Problem

For my game development, I chose a 19.5:9 aspect ratio for my Photoshop documents. This meant that the left and right edges were cut off to varying degrees on anything that wasn’t an iPhone X-style device.

The alternative that many tutorials use is to go with a 4:3 aspect ratio and have the top and bottom of the scene cut off on anything that wasn’t an iPad. See this post for examples.

(The contrarian in me thinks it’s easier to design additional non-critical things at the left and right edges of a scene than at the top and bottom, so I went with 19.5:9—but the general problem of oversized sprites applies either way.)
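To put a number on “cut off to varying degrees”, here’s a rough sketch in plain Swift (no SpriteKit) that models aspect-fill scaling, the behaviour SpriteKit documents for `SKSceneScaleMode.aspectFill`: the scene is scaled by the larger of the width and height ratios, and whatever overflows the screen is cropped. The helper name `visibleSceneSize` is my own, and measuring the screen in pixels rather than points is a simplification that doesn’t change the ratios.

```swift
import Foundation

/// Hypothetical helper: models aspect-fill scaling of a fixed-size scene onto a
/// screen and returns how much of the scene (in scene units) stays visible.
func visibleSceneSize(scene: CGSize, screen: CGSize) -> CGSize {
    // Aspect fill: use the larger ratio so the screen is always fully covered.
    let scale = max(screen.width / scene.width, screen.height / scene.height)
    // Anything beyond screen / scale along either axis is cropped.
    return CGSize(width: min(scene.width, screen.width / scale),
                  height: min(scene.height, screen.height / scale))
}

let scene = CGSize(width: 4438, height: 2048)  // the 19.5:9 design document

// iPhone 7 in landscape: 1334 x 750 pixels (16:9)
let phone = visibleSceneSize(scene: scene, screen: CGSize(width: 1334, height: 750))
// 12.9-inch iPad Pro in landscape: 2732 x 2048 pixels
let pad = visibleSceneSize(scene: scene, screen: CGSize(width: 2732, height: 2048))

print("iPhone 7 sees \(Int(phone.width.rounded())) of \(Int(scene.width)) pixels across")
print("iPad Pro sees \(Int(pad.width.rounded())) of \(Int(scene.width)) pixels across")
```

On the iPhone 7 this works out to roughly 3,643 of the 4,438 horizontal pixels, and on the iPad Pro 2,732 of them, which is why anything critical has to live in the middle of the document.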

An iPad Pro has 2048 vertical pixels. For a 19.5:9 aspect ratio, that means 4438 horizontal pixels.
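The horizontal figure is just the height multiplied by 19.5/9 and rounded up to a whole pixel. A tiny helper (the name `documentWidth` is hypothetical) makes it easy to check other heights too:

```swift
import Foundation

/// Width in pixels of a document with the given height and aspect ratio,
/// rounded up so the full ratio always fits.
func documentWidth(forHeight height: Double,
                   ratioWidth: Double = 19.5, ratioHeight: Double = 9) -> Int {
    // Multiply before dividing so exact cases (1536 x 19.5 / 9 = 3328) stay exact.
    Int((height * ratioWidth / ratioHeight).rounded(.up))
}

print(documentWidth(forHeight: 2048))  // 4438 (iPad Pro height)
print(documentWidth(forHeight: 1536))  // 3328 (regular iPad height)
```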

Them’s alotta pixels, boss.

The iPhone XS Max has the most pixels of any Apple phone in the history of Apple phones ever.

How many?

2688 x 1242

That means that sprites designed to look sharp on an iPad Pro will still be scaled down by almost 40% on this, Apple’s highest-pixel-count phone ever.
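The shrink factor is easy to check by comparing landscape heights, assuming the scene is scaled to fit the screen height (so the sides are what gets cropped). `downscale` is a made-up helper name:

```swift
import Foundation

/// Fraction by which a texture drawn for `designHeight` pixels shrinks when
/// the scene is shown on a screen `deviceHeight` pixels tall (landscape).
func downscale(designHeight: Double, deviceHeight: Double) -> Double {
    1 - deviceHeight / designHeight
}

let xsMax = downscale(designHeight: 2048, deviceHeight: 1242)   // iPhone XS Max
let iPhone7 = downscale(designHeight: 2048, deviceHeight: 750)  // iPhone 7
print(String(format: "XS Max: %.0f%%, iPhone 7: %.0f%%", xsMax * 100, iPhone7 * 100))
// prints "XS Max: 39%, iPhone 7: 63%"
```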

By contrast, the iPhone 7 has a measly 1334 x 750 pixels.

Why would I use the same sprite designed for a 2732 x 2048 pixel display on a device that has a 1334 x 750 pixel display?

Because SpriteKit Is Insanely Efficient, You Donut

So, after spending hours trying to optimise my asset development, I actually tried it out on my iPhone 7.

First, I created a scene with three different iPad-sized textures and a way of adding hundreds of them really quickly.

Of course, SpriteKit didn’t even blink. It’s very good at knowing when textures are being reused, even if they are being used by different nodes.

So I then created thirty individual textures and tried the same thing.

I think it got up to around 450 nodes on screen before the frame rate started sputtering. It started really tanking at around 1,000 nodes and was down to 15fps at 2,500.

Still didn’t crash, though.

Shouldn’t I Be Thinking in Points, Not Pixels, Anyway?

Yes.

No.

Here’s the thing:

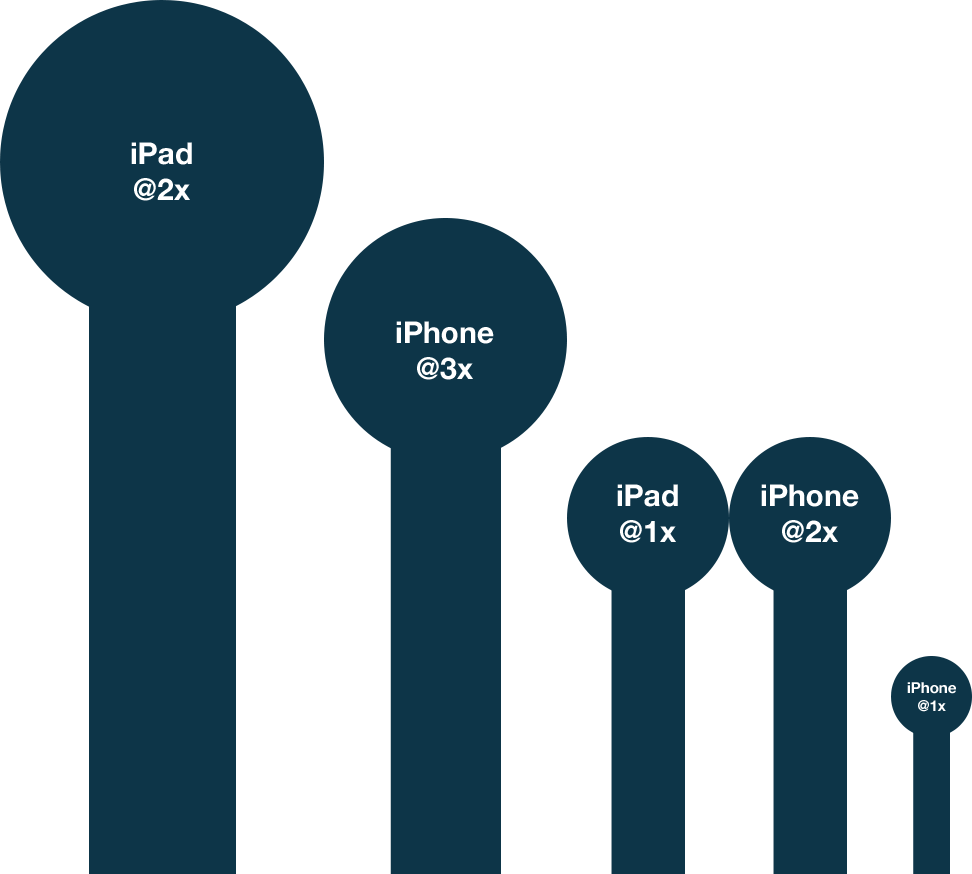

All the current iPads and many of the iPhones use the same 2x scale, but the iPads simply have a lot more pixels.

In an ideal world, I’d design a big bootiful Photoshop document at 4438 x 2048 then create assets with the following scene sizes in mind:

1110 x 512 (@1x)

2219 x 1024 (@2x)

3329 x 1536 (@3x)

2219 x 1024 (@2x—equivalent to iPad @1x)

4438 x 2048 (@4x—equivalent to iPad @2x)
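Every entry in that list is the master document divided by 4 and multiplied by the target scale, rounded to the nearest pixel. A minimal sketch, treating the 4438 x 2048 document as the hypothetical @4x source:

```swift
import Foundation

// Master document: 4438 x 2048 pixels, treated as the @4x (iPad @2x) source.
let master = (width: 4438.0, height: 2048.0)
let masterScale = 4.0

/// Pixel dimensions of the scene at a given asset scale, derived from the master.
func sceneSize(atScale scale: Double) -> (width: Int, height: Int) {
    let factor = scale / masterScale
    // .rounded() rounds halves away from zero, so 1109.5 becomes 1110.
    return (width: Int((master.width * factor).rounded()),
            height: Int((master.height * factor).rounded()))
}

for scale in [1.0, 2.0, 3.0, 4.0] {
    let size = sceneSize(atScale: scale)
    print("@\(Int(scale))x: \(size.width) x \(size.height)")
}
// @1x: 1110 x 512, @2x: 2219 x 1024, @3x: 3329 x 1536, @4x: 4438 x 2048
```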

I would set up my game scene at the 1x size of 1110 x 512 and then let iOS pull out the correct asset for each device’s scale (e.g. on an iPhone XR, it would pull out the 2x phone asset; on an iPhone XS Max, the 3x phone asset; on an iPad Pro, the hypothetical 4x iPad asset).
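The rule I had in mind boils down to “phones use their own screen scale, iPads use double theirs”. Here it is as a hypothetical Swift function. The `Idiom` enum is a stand-in for reading the real device idiom and screen scale (for example `UIDevice.current.userInterfaceIdiom` and `UIScreen.main.scale` in UIKit), and this is not how asset catalogs actually name their buckets:

```swift
import Foundation

/// Hypothetical stand-in for the device idiom reported by UIKit.
enum Idiom { case phone, pad }

/// Which multiple of the 1x (1110 x 512) scene the textures should be drawn at,
/// under a scheme where iPads are treated as having double the phone scale.
func assetMultiplier(idiom: Idiom, screenScale: Int) -> Int {
    switch idiom {
    case .phone: return screenScale      // regular @2x or @3x phone assets
    case .pad:   return screenScale * 2  // iPad @1x -> 2x, iPad @2x -> 4x
    }
}

print(assetMultiplier(idiom: .phone, screenScale: 2))  // iPhone XR: 2
print(assetMultiplier(idiom: .phone, screenScale: 3))  // iPhone XS Max: 3
print(assetMultiplier(idiom: .pad, screenScale: 2))    // iPad Pro: 4
```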

There would still be some minor scaling, but the actual pixel dimensions of the textures would be at a much better size for the device the game is targeting.

When setting up my scene, I would be thinking in points. All of the sprites would be positioned within the 1110 x 512 space. Every device, including the iPads, would have the same dimensions in points.

It’s just that on the iPad, the assets loaded would be 4x the size, so they would look very crisp on those big, beautiful displays, with the bonus that the game would function identically on all devices.

Asset Catalogs Do This Already!

Asset catalogs in Xcode have buckets for iPads already, that much is true.

I can create iPad-specific assets. I can make those assets 4x the size of the phone ones. I know that iOS will use them if the game is running on an iPad.

Problem solved?

Kind of.

When I set up the scene, I explicitly set the size of the node.

I said to SpriteKit, I said: “The size of this node is 81 x 218 points, right? You DO NOT go bigger than this, you hear?”

“Fine,” SpriteKit replied. “But if I’m on an iPhone XR, then I’m getting a sprite that is 162 x 436 pixels and I’m squeezing that sucker in there.”

“OK by me,” I said. “Make it look sharp.”

“Fine. On an iPhone XS Max, I’m getting one that is 243 x 654 pixels, then. Bet it won’t fit.”

“We’ll see,” I said.

Unperturbed, SpriteKit said: “Right, then. On an iPad, I’m going to squeeze a sprite that is 324 x 872 pixels into that tiny 81 x 218 point space. How’d you like me now, bitch?”

“Ah ha ha,” I replied. “I am Walter White, you are but a Jesse. You did what I wanted you to do and now my game looks crispy clean on an iPad Pro.”

And…scene.
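Stripped of the trash talk, that exchange is one multiplication per device. A sketch (the helper name is hypothetical) of the texture sizes needed to fill the 81 x 218 point node without any upscaling:

```swift
import Foundation

/// Pixel size of the texture needed to fill a node of `points` size
/// without upscaling, for a given asset multiplier.
func texturePixels(for points: CGSize, multiplier: CGFloat) -> CGSize {
    CGSize(width: points.width * multiplier, height: points.height * multiplier)
}

let node = CGSize(width: 81, height: 218)  // the stick dude, in scene points
let devices: [(String, CGFloat)] = [("iPhone XR", 2), ("iPhone XS Max", 3), ("iPad Pro", 4)]

for (device, multiplier) in devices {
    let px = texturePixels(for: node, multiplier: multiplier)
    print("\(device): \(Int(px.width)) x \(Int(px.height)) pixels")
}
// iPhone XR: 162 x 436, iPhone XS Max: 243 x 654, iPad Pro: 324 x 872
```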

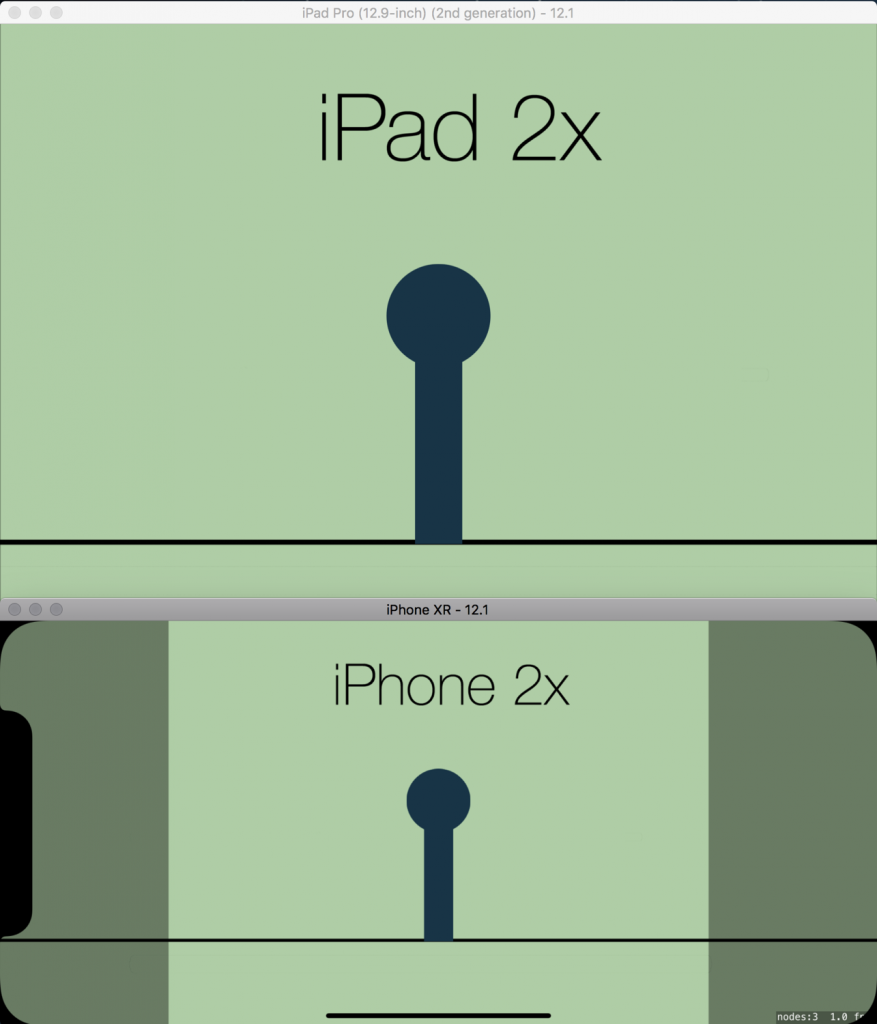

The game scene is defined as being 1110×512 points, which means that it is being scaled up by 4x on an iPad Pro to fit. To stop everything becoming a blurry mess, the textures also need to be 4x the size.

If I didn’t do this, then SpriteKit would use the @2x textures from the Universal bucket in the asset catalog, but these would be sized for a 2219×1024 (1110×512 x 2) screen (half the vertical size of the iPad Pro):

So far, so good.

Now let’s say my little stick dude has had a few too many and falls over. On the iPhone, everything looks great:

Unfortunately, on the iPad:

Oh noes! He got drunk and he hulked out on the way down!

Because the falling animation uses frames with different dimensions from the standing sprite, I wanted to use the SKAction method animate(with:timePerFrame:resize:restore:), as this has an option to automatically resize the sprite based on the texture.

Unfortunately, the iPad textures are at an effective 4x scale. SpriteKit only understands iPads to be, at most, 2x, so it resizes the sprite to twice the size it should be.
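Here’s a rough model of what goes wrong, under the assumption that a resize-to-texture call sets the sprite to the texture’s size in points, i.e. its pixel size divided by the scale the system believes the texture has. This is a plain-Swift illustration of my understanding, not SpriteKit’s actual implementation, and `texturePointSize` is a hypothetical name:

```swift
import Foundation

/// Assumed model (not SpriteKit source): a texture's point size is its
/// pixel size divided by the scale the system thinks the texture has.
func texturePointSize(pixels: CGSize, assumedScale: CGFloat) -> CGSize {
    CGSize(width: pixels.width / assumedScale, height: pixels.height / assumedScale)
}

let intendedNodeSize = CGSize(width: 81, height: 218)  // points in the 1110 x 512 scene
let iPadTexture = CGSize(width: 324, height: 872)      // drawn at an effective 4x

// What the layout expects vs. what resizing to the texture produces.
let correct = texturePointSize(pixels: iPadTexture, assumedScale: 4)
let resized = texturePointSize(pixels: iPadTexture, assumedScale: 2)  // iPads top out at 2x
print("intended:", correct, "after resize:", resized)
```

With the @4x pixels divided by 2 instead of 4, the stick dude comes out at 162 x 436 points, exactly double the 81 x 218 the scene was laid out for.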

This means that using asset catalogs with iPad-specific buckets is basically a non-starter if I want to use any SpriteKit API that automatically resizes a sprite based on the size of its texture behind the scenes, unless I maintain a separate iPad fork (and I don’t want to; see below).

Since I don’t know what all of those are, I am basically guaranteeing that there will be bugs.

This Is All Really Confusing

Yeah, it is, and a lot of it goes against much of the orthodoxy in iOS design in general. I’m not supposed to be thinking about pixels, only points. I’m supposed to use the asset catalog buckets and let the system figure it out.

However, in a regular app, I’m able to explicitly lay out iPad designs differently while still providing the same functionality. This doesn’t apply to games. Often, things need to look (or at least be proportionally) the same across all devices, or the very nature of the game changes.

There’s a good reason why so many tutorials say “just size everything at the pixel dimensions of the iPad, put a single iPad-sized asset in the Universal 1x bucket, and don’t think about your memory footprint” (that last bit is often implied).

Conclusion (After Many Hours)

I should go with the iPad Pro size. Create scenes at 4438 x 2048 (or 2732 x 2048 if I want to clip top and bottom on phones), drop assets into the Universal 1x bucket, let SpriteKit scale stuff down on the phones and not worry about it.

If I’m still worried about it, I can do the same but use the regular iPad vertical size (for documents that are 3328 x 1536 in size). Let SpriteKit scale it down for phones, and up slightly for the iPad Pro (but that upscaling is still significantly less than if I was using iPhone@2x-sized assets).
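To compare the two fallbacks, the upscale on an iPad Pro is just its 2048-pixel height divided by the height the assets were drawn for. A quick sketch with a hypothetical helper:

```swift
import Foundation

/// How much assets drawn for `assetHeight` pixels must be stretched
/// to fill a screen `screenHeight` pixels tall (landscape).
func upscale(from assetHeight: Double, to screenHeight: Double) -> Double {
    screenHeight / assetHeight
}

let fromRegularIPad = upscale(from: 1536, to: 2048)  // 3328 x 1536 documents
let fromPhone2x = upscale(from: 1024, to: 2048)      // iPhone @2x (2219 x 1024) assets
print(fromRegularIPad, fromPhone2x)
```

That’s roughly a 1.33x stretch for regular-iPad-sized documents versus a full 2x for phone @2x assets.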

If I really want to get it right, then I have to prepare myself for a world of hurt:

I’ll need to have game scenes of different sizes. This will mean doing some device detection and either using two SKS files (one for the 1x phone size [1110 x 512] and one at the 1x iPad size [2219 x 1024]) or passing in different values when setting up the scenes (as I did for the jigsaw puzzle example).
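The device-detection half of that could look something like this sketch. `Idiom` is a hypothetical stand-in for the real device idiom, the sizes are the two 1x scene sizes from above, and the result would then be handed to something like `SKScene(size:)`:

```swift
import Foundation

/// Hypothetical stand-in for the device idiom reported by UIKit.
enum Idiom { case phone, pad }

/// Scene size in points for the "do it properly" approach:
/// phones get the 1x phone scene, iPads the 1x iPad scene.
func sceneSize(for idiom: Idiom) -> CGSize {
    switch idiom {
    case .phone: return CGSize(width: 1110, height: 512)
    case .pad:   return CGSize(width: 2219, height: 1024)
    }
}

print(sceneSize(for: .phone), sceneSize(for: .pad))
```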

But wait, there’s more!

I’ll also have to remember that positioning will be different. The two scenes are now different sizes, so a sprite positioned at 300, 300 on the iPhone version would need to be positioned at 600, 600 for the iPad version for it to look like it’s in the same place. If there are entities with speeds defined in points per second, they will also have to be doubled.

I will have to think about whether every property of every entity should be doubled or not.

I will have to think about that when I’m changing any property of any entity.

(OK, so computers are really good at this stuff and I could make a bunch of CGPoint, CGFloat, and CGVector extensions that would make this easier, but it still adds a cognitive burden.)
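For what it’s worth, the extensions I have in mind would be something like this hypothetical sketch: a single factor (1 on phones, 2 on iPads) applied to any position, size, length or speed authored for the phone-sized scene. The `scaled(by:)` name is my own invention, and a `CGVector` version would follow the same pattern:

```swift
import Foundation

extension CGFloat {
    /// A length or speed authored for the phone scene, scaled for the current scene.
    func scaled(by factor: CGFloat) -> CGFloat { self * factor }
}

extension CGPoint {
    /// A position authored for the phone scene, scaled for the current scene.
    func scaled(by factor: CGFloat) -> CGPoint { CGPoint(x: x * factor, y: y * factor) }
}

extension CGSize {
    /// A node size authored for the phone scene, scaled for the current scene.
    func scaled(by factor: CGFloat) -> CGSize {
        CGSize(width: width * factor, height: height * factor)
    }
}

// Decided once at launch: 1 on phones, 2 on iPads (hard-coded here for illustration).
let factor: CGFloat = 2

let position = CGPoint(x: 300, y: 300).scaled(by: factor)  // (600, 600) on the iPad
let speed = CGFloat(120).scaled(by: factor)                // points per second, doubled
print(position, speed)
```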

So, no. Oversized sprites on iPhones it is. SpriteKit seems to be able to handle it. Phones seem to be able to handle it.

It just goes against almost a decade of iOS development experience.

Or am I crazy? Have I completely overlooked or misunderstood something? Forgotten to take something into account? Is there some magic setting that I’m not aware of that would fix all this?

Please let me know if there is!

Please.